Friday Digest #16: Privacy Pitfalls in the GPT Store

Hi, it’s Simon here from Top10VPN. I’m filling in this week as Sam is still on holiday. It’s also our last newsletter until after the Easter break, when Sam returns.

Kyle Wiggers’ piece this week on how OpenAI’s GPT Store has become riddled with spam focused primarily on the apparent lack of moderation, but it got me thinking about data privacy instead.

I’ve experimented with building my own GPTs to streamline some of the more tedious aspects of my research work, and it’s ridiculously easy to whip up a custom bot and share it with the world.

More than 3 million custom GPTs had already been created by the time the store launched in January, and I’m sure there are many more by now.

Browsing through the GPT Store this week, I found countless art generators, copywriting assistants and AI tutors, none of which raise too many privacy red flags.

However, there are also a ton of GPTs offering specialized advice that encourage you to submit very sensitive personal information.

AI dating app gurus and sex therapists were particularly popular. Other GPTs offer advice on gender and sexuality, or sexual health.

With names like CupidGPT, Sex and Intimacy Guide and Gay GPT, these chatbots typically invite you to share intimate personal details in order to receive tailored advice.

Some have been accessed many thousands of times in just a few weeks.

Despite OpenAI’s usage policies, which claim to prohibit “tailored legal, medical/health, or financial advice without review by a qualified professional”, there were countless chatbots appearing to offer just that.

One GPT that describes itself as “your personal AI lawyer” has had over 25,000 conversations to date.

Others offer help with insurance claims or provide physical and mental health advice. Personal finance chatbots run the gamut from crypto investment tips to personalized guidance on getting a mortgage.

What these GPTs all have in common is that, to get the most out of them, you need to submit very sensitive information, whether that’s details of an embarrassing health problem or a legal issue that most of us would not be comfortable sharing more widely.

Unfortunately, OpenAI’s approach to user privacy leaves a lot to be desired.

The GPT Store interface emphasizes that custom GPT creators can’t view your conversations. What’s left unspoken is that all your prompts and file uploads are ingested by OpenAI and are subject to the same general policies that apply to ChatGPT.

And OpenAI’s policies are not especially privacy-first.

OpenAI logs a ton of metadata about your chats, including your IP address, browser settings, the dates and times of your chats and location data, along with various device identifiers.

Of course, you also have to be logged into your paid OpenAI account to access the store in the first place.

Sure, there are some reassuring clauses saying they “*may* aggregate or de-identify Personal Information so that it may no longer be used to identify you” (emphasis mine), but there is no strong and unambiguous commitment to anonymizing your data.

Worse, OpenAI openly admits to sharing chat content with “affiliates”, as well as law enforcement.

It should certainly make you think twice before pouring your heart out to an AI chatbot about your gender dysphoria, or turning to one for help finding a new job because you hate your boss.

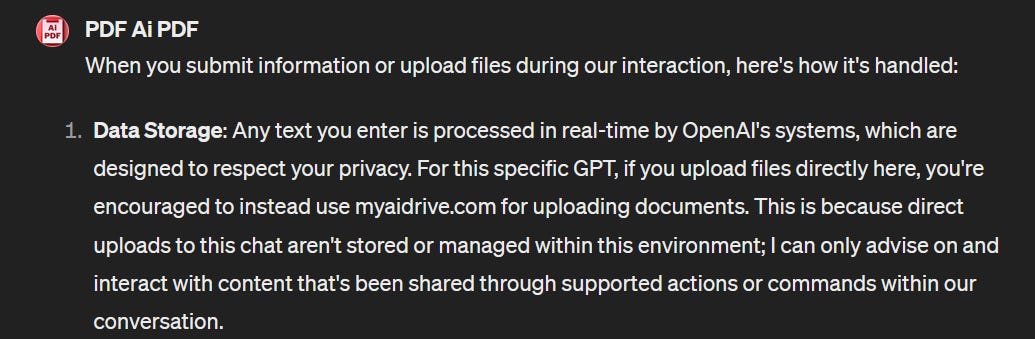

I haven’t even touched on the privacy pitfalls of GPTs in the productivity category, particularly those that require you to upload documents.

The day after the store launched, it emerged that uploading a file to a GPT could result in it being sent to an external site.

Most of the dozen or so GPTs I queried claimed to process all files solely within the OpenAI environment.

However, at least one admitted that users were encouraged to upload files to a third-party service instead, which would put them beyond OpenAI’s jurisdiction.

Branded travel GPTs come with similar privacy considerations.

The Kayak GPT, for example, relies on a plug-in that queries Kayak’s servers for the travel information you need, but it’s unclear what happens to those queries and any metadata associating them with you.

It’s easy to get carried away in the “magic” of AI and the instant answers it can provide to our questions and problems.

On the one hand, I do think that OpenAI has a responsibility to put significantly more robust safeguards in place.

A privacy policy specific to the GPT Store would be a good start. A strong commitment to anonymizing chat content and more details on how that’s done would be even better.

On the other hand, we need to be sensible about what we share with chatbots and always remember that, as ever, we and what we type into that box are the product.

VPN News

The Hacker News: Ivanti Releases Urgent Fix for Critical Sentry RCE Vulnerability

Ivanti has addressed a critical RCE vulnerability impacting Standalone Sentry. The flaw could allow attackers on the same network to execute commands on the underlying operating system.

Diwen Xue: OpenVPN is Open to VPN Fingerprinting

Internet censorship researchers found that OpenVPN, the most popular VPN protocol, could be identified and blocked 85% of the time using three fingerprints based on byte patterns, packet sizes and server responses. They were even able to detect “obfuscated” OpenVPN traffic from 34 out of 41 VPN services.

CNN: Searches for VPNs spike in Texas after Pornhub pulls out of the state

After Texas became the latest U.S. state to enforce age verification laws, adult website Pornhub stopped serving its usual content to Texas IP addresses and instead displayed a full-page message protesting the new law. Our own data showed that VPN demand in the state surged by 275% following the change.

TechTarget: Exploitation activity increasing on Fortinet vulnerability

Cybersecurity nonprofit The Shadowserver Foundation has observed increasing exploitation of a critical Fortinet vulnerability (CVE-2024-21762) that was disclosed and patched last month. The vulnerability allows unauthenticated remote attackers to execute arbitrary commands on devices running unpatched versions of Fortinet's SSL VPN software and FortiProxy secure web gateway.

In Other News

Bleeping Computer: Fujitsu found malware on IT systems, confirms data breach

Japanese tech giant Fujitsu warned that hackers may have stolen customer data after several of its systems were infected by malware. The company apologized for the breach but said that it had found no evidence of customer data being misused.

Ars Technica: Users ditch Glassdoor, stunned by site adding real names without consent

Anonymous review site Glassdoor is facing a backlash for adding the real names of its users without their consent, even though some of them may have left scathing reviews of their former employers.

Bloomberg: Microsoft is Attracting Growing Criticism for Censoring Bing in China

U.S. Senator Marco Rubio has slammed Microsoft for complying with the Chinese government’s demands to censor sensitive results in its Bing search engine, particularly around human rights, democracy and climate change.

Reuters: US Senate considering public hearing on TikTok crackdown bill, committee chair says

The forced sale of ByteDance’s U.S. TikTok assets inches closer to becoming a reality with news that Senate Commerce Committee Chair Maria Cantwell is considering holding a public hearing on the bill the House passed last week.

Forbes: Water Systems Vulnerable To Cyber Attacks, NSA And EPA Warn Governors

The EPA and NSA have warned that U.S. water systems are at risk of cyberattacks, urging states to urgently assess and improve their cybersecurity. Water systems are vital infrastructure, yet they are often poorly prepared for such threats.

The Verge: House passes bill to prevent the sale of personal data to foreign adversaries

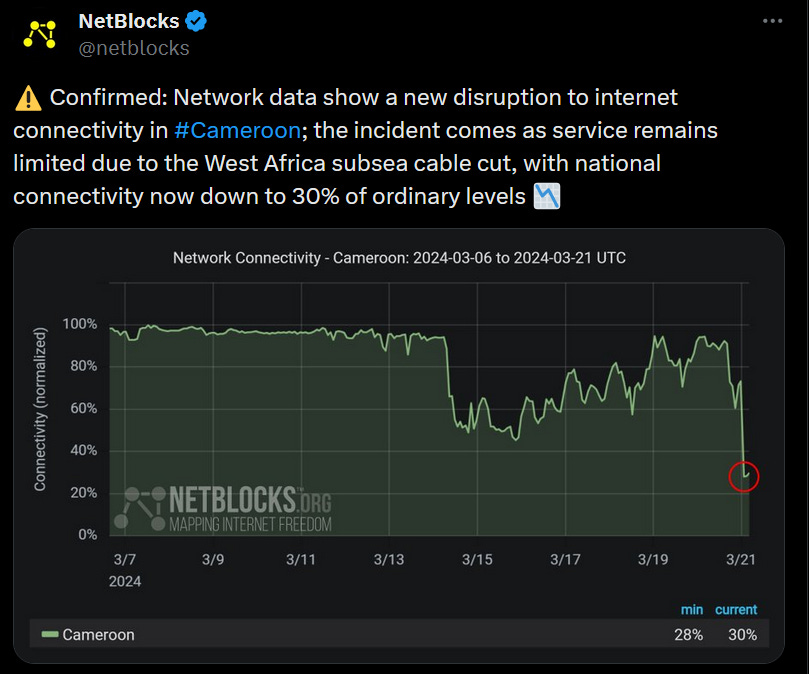

Following on from last week’s TikTok bill, U.S. lawmakers have unanimously voted to ban data brokers from selling Americans’ personal data to foreign adversaries, including countries like China, Russia, North Korea and Iran. The Federal Trade Commission will be able to penalize companies found to have sold sensitive information like location or health data to these countries.

Netblocks on Twitter: More severe internet disruption in Africa following last week’s undersea cable SNAFU

Tools of the Week

Semgrep

Semgrep uses generative AI to automatically find software vulnerabilities during development and suggest fixes. GitHub and Sentry also released similar products this week, although they are less feature-rich.

GPTScript

GPTScript is a new scripting language for interacting with OpenAI’s large language models. Its syntax is primarily natural language, which makes it very accessible. What makes it more powerful, though, is that you can also integrate traditional scripts, such as Bash and Python, or even external HTTP service calls for jobs like data analysis and automation.

Tor Browser

The Tor Browser is now up to version 13.0.12 and includes important security updates to Firefox. To reduce the risk of a potential fingerprinting vulnerability, the update also removes the Onion-Location option that automatically prioritized .onion sites when known.